An Education-Based System for Two-Way Translations of Sign Language

DOI:

https://doi.org/10.37934/sijcrlhs.3.1.2538

Keywords:

Sign language translations, 2D CNN, educational system

Abstract

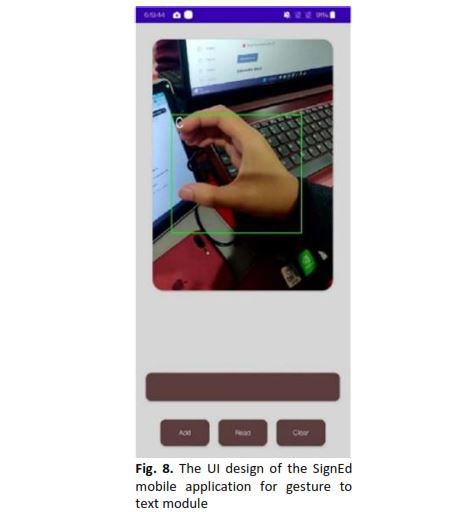

There are approximately 70 million people with hearing disabilities, and more than 200 sign languages exist. People with hearing disabilities often feel excluded, since only a few people are able to communicate with them. The two-way sign language translator system is a project that aims to help deaf and hard-of-hearing people communicate and to help others learn sign language. The research begins with a comparison of the algorithms used in previous studies in order to identify the most suitable one. The 2D CNN algorithm is selected and implemented in six steps, beginning with data acquisition from a Kaggle dataset of 28 different static sign language signs. The data is then pre-processed, and features are extracted and selected using pretrained EfficientNetB0 weights for better generalization. A 2D CNN architecture is used for model training, comprising three layers: a 2D global average pooling layer, a dropout layer, and a fully connected layer with one node. Mean absolute error is employed as the loss function and Adam as the optimizer. The model is fine-tuned by experimenting with different batch sizes and numbers of iterations, with a batch size of 32 and 100 iterations yielding the best results. The model's performance is assessed through Android mobile applications, achieving 97.3% accuracy within 12 trials. The model exhibits occasional misclassifications, primarily for signs with certain hand orientations, such as 'I', 'N', 'E', and 'S'. The model is further tested in various scenarios, demonstrating its robustness with 96.4% accuracy against complex backgrounds and a lower accuracy of 60.7% in poor lighting conditions. Moreover, the results indicate that body mass index (BMI) can influence the model's performance, since people with a higher BMI tend to have less joint flexibility. To enhance the system's functionality, future work could focus on continuous signing, dataset expansion, and adaptive learning. These improvements aim to broaden the system's capabilities and ensure more inclusive communication for individuals with hearing disabilities.
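
The three-layer head and the loss function described above can be illustrated with a minimal, dependency-free Python sketch. It is not the authors' implementation: the toy feature map, the dropout rate, and the weights and bias are hypothetical values chosen only for illustration, and the pretrained EfficientNetB0 backbone is assumed to have already produced the feature map. In a framework such as Keras, these steps would typically correspond to the `GlobalAveragePooling2D`, `Dropout`, and `Dense` layers.

```python
import random


def global_average_pool(feature_map):
    """Average an H x W x C feature map over its spatial axes, giving C values."""
    height = len(feature_map)
    width = len(feature_map[0])
    channels = len(feature_map[0][0])
    sums = [0.0] * channels
    for row in feature_map:
        for pixel in row:
            for c, value in enumerate(pixel):
                sums[c] += value
    return [s / (height * width) for s in sums]


def dropout(values, rate, training, rng=random):
    """Inverted dropout: while training, zero each value with probability `rate`
    and scale the survivors by 1 / (1 - rate); at inference, pass values through."""
    if not training or rate == 0.0:
        return list(values)
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]


def dense_one_node(values, weights, bias):
    """Fully connected layer with a single output node: weighted sum plus bias."""
    return sum(v * w for v, w in zip(values, weights)) + bias


def mean_absolute_error(targets, predictions):
    """Mean of the absolute differences between targets and predictions."""
    return sum(abs(t - p) for t, p in zip(targets, predictions)) / len(targets)


# Hypothetical 2 x 2 x 3 feature map standing in for the backbone's output.
feature_map = [
    [[1.0, 2.0, 3.0], [3.0, 4.0, 5.0]],
    [[5.0, 6.0, 7.0], [7.0, 8.0, 9.0]],
]

pooled = global_average_pool(feature_map)
# The dropout rate of 0.2 is an assumption; the abstract does not state one.
regularized = dropout(pooled, rate=0.2, training=False)
# Hypothetical weights and bias for the single output node.
output = dense_one_node(regularized, weights=[0.5, -1.0, 0.25], bias=1.0)

print(pooled)
print(output)
print(mean_absolute_error([1.0, 2.0], [0.5, 3.0]))
```

Because dropout is disabled at inference time, the pooled features reach the fully connected layer unchanged; only during training are activations randomly zeroed and rescaled.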